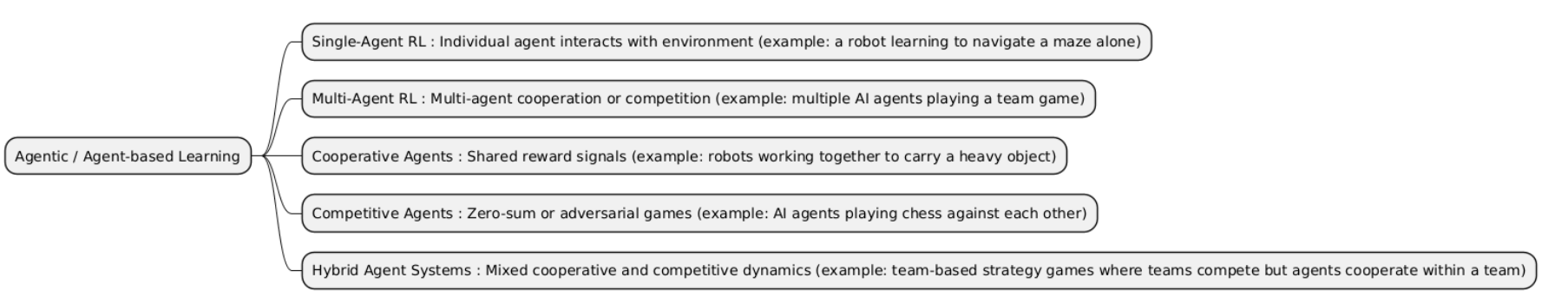

Agentic (or agent-based) learning is a type of machine learning in which autonomous agents learn behaviors or strategies by interacting with an environment. Each agent makes decisions, observes the outcomes, and adapts over time. The approach is common in simulations, multi-agent systems, and reinforcement learning.
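The decide, observe, adapt cycle described above can be sketched as a plain interaction loop. This is a minimal illustration only: `CorridorEnv`, `run_episode`, and the two policies are hypothetical names chosen for this sketch, not part of any library, and the loop shows the decide and observe steps without any learning yet.

```python
import random


class CorridorEnv:
    """Hypothetical 1-D corridor: the agent starts at cell 0, the goal is the last cell."""

    def __init__(self, n_cells=5):
        self.n_cells = n_cells
        self.position = 0

    def reset(self):
        self.position = 0
        return self.position

    def step(self, action):
        # action: 0 = move left, 1 = move right; walls clamp the position.
        delta = 1 if action == 1 else -1
        self.position = max(0, min(self.n_cells - 1, self.position + delta))
        done = self.position == self.n_cells - 1
        reward = 1.0 if done else 0.0
        return self.position, reward, done


def run_episode(env, policy, max_steps=1000):
    """Run one decide -> act -> observe loop; return (total_reward, steps_taken)."""
    state = env.reset()
    total_reward = 0.0
    for step in range(1, max_steps + 1):
        action = policy(state)                  # the agent decides
        state, reward, done = env.step(action)  # the environment responds
        total_reward += reward                  # the agent observes the outcome
        if done:
            return total_reward, step
    return total_reward, max_steps


rng = random.Random(0)


def random_policy(state):
    return rng.choice([0, 1])


def always_right(state):
    return 1


print(run_episode(CorridorEnv(), always_right))   # (1.0, 4)
print(run_episode(CorridorEnv(), random_policy))
```

A learning agent would replace the fixed policy with one that is updated from the observed rewards, which is the "adapts over time" part of the definition.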

| Type | What it is | When it is used | When it is preferred over other types | When it is not recommended | Example projects |
|---|---|---|---|---|---|
| Single-Agent RL | Single-Agent Reinforcement Learning (Single-Agent RL) involves one agent interacting with an environment to learn a policy that maximizes cumulative reward. The agent learns from its own actions and feedback, without other agents influencing the environment. | Used when the task requires only one decision-maker in the environment and no other intelligent agents affect the outcomes. | • Better than Multi-Agent RL when there is only one controllable entity and no need to model interactions with others. • Simpler and more stable to train than cooperative or competitive multi-agent setups. • Ideal for isolated optimization problems. | • Not suitable when multiple agents interact or compete in the same environment. • Avoid if you need coordination or negotiation between agents. • Less effective for complex environments with multiple stakeholders. | • Autonomous robot navigation in a static environment. • Stock trading agent making decisions independently. • Game AI for single-player puzzles or simulations. |
| Multi-Agent RL | Multi-Agent Reinforcement Learning (Multi-Agent RL) involves multiple agents interacting within the same environment. Agents may cooperate, compete, or both, and each learns a policy that takes the actions of the others into account. | Used when several decision-makers affect the environment and their interactions are critical to task performance. | • Better than Single-Agent RL when the environment dynamics depend on multiple agents. • More suitable than isolated single-agent setups for coordination, competition, or negotiation tasks. • Ideal for modeling complex social or multi-actor systems. | • Not advised for simple, isolated problems with only one agent. • Avoid when compute is limited, since training cost grows with the number of agents. • Hard to train when the environment is highly stochastic, which slows convergence. | • Autonomous vehicle coordination at intersections. • Multi-robot warehouse management for task allocation. • AI agents in multiplayer strategy games learning to cooperate or compete. |
| Cooperative Agents | Cooperative Agents are a type of Multi-Agent RL in which multiple agents work together toward a shared goal, learning policies that maximize joint rewards rather than individual gains. | Used when agents must collaborate to achieve a task that a single agent cannot complete efficiently alone. | • Better than Single-Agent RL when teamwork or shared objectives matter. • Better than general Multi-Agent RL when the focus is purely on cooperation, not competition. • Ideal for resource allocation, coordination, or swarm robotics. | • Not suitable when agents need to compete or pursue individual goals. • Avoid for tasks that a single agent can solve efficiently. • Can be complex to train if joint reward signals are sparse or delayed. | • Drone swarm for search-and-rescue, coordinating to cover an area. • Multi-robot warehouse automation, optimizing package delivery together. • Collaborative AI assistants solving complex tasks jointly. |
| Competitive Agents | Competitive Agents are a type of Multi-Agent RL in which multiple agents compete against each other, each aiming to maximize its own reward, potentially at the expense of the others. | Used when the environment involves rivalry or adversarial interactions and the success of one agent may reduce the success of others. | • Better than Single-Agent RL when the task involves opponents or adversaries. • Better than Cooperative Agents when the focus is on competition rather than collaboration. • Ideal for games, markets, or adversarial simulations. | • Not suitable for collaborative tasks where agents must cooperate. • Avoid when the environment is static and predictable enough to be solved by a single agent. • Training can be unstable if the competitive dynamics are highly complex. | • AI for two-player board games (e.g., chess, Go). • Autonomous car racing where agents compete for the best lap times. • Simulated trading agents in financial markets competing for profit. |
| Hybrid Agent Systems | Hybrid Agent Systems combine cooperative and competitive agents within the same environment. Agents may collaborate on some tasks while competing on others, learning policies that balance both objectives. | Used when the environment requires a mix of collaboration and competition among multiple agents, reflecting real-world multi-agent dynamics. | • Better than Single-Agent RL when multiple interacting agents exist. • Better than purely Cooperative or Competitive Agents when tasks involve both shared goals and rivalry. • Ideal for complex social simulations, economic modeling, or multi-team strategy games. | • Not suitable for simple tasks that one agent, or a purely cooperative or purely competitive setup, can solve. • Avoid when training resources are limited, as balancing multiple objectives can be computationally intensive. • Can be hard to converge if the reward signals for cooperation and competition conflict. | • Team-based multiplayer strategy games where teams cooperate internally but compete against other teams. • Autonomous driving in mixed traffic, where some vehicles cooperate while others act competitively. • Resource management simulations with multiple organizations balancing competition and collaboration. |
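One way to make the cooperative, competitive, and hybrid rows concrete is to look at how per-agent rewards could be derived from the same task scores. The sketch below is an assumption made for illustration: `assign_rewards`, its `mode` strings, and the specific formulas (joint sum, zero-sum difference from the others' average, team totals) are hypothetical choices, not a standard API or the only possible reward design.

```python
def assign_rewards(scores, mode, teams=None):
    """Turn per-agent task scores into per-agent rewards (illustrative only).

    cooperative: every agent receives the joint total.
    competitive: each agent receives its score minus the average score of
                 the other agents, so rewards sum to zero (needs >= 2 agents).
    hybrid:      agents share their team's total, and teams compete in the
                 same zero-sum way (needs >= 2 teams).
    """
    n = len(scores)
    if mode == "cooperative":
        joint = sum(scores)
        return [joint] * n
    if mode == "competitive":
        total = sum(scores)
        return [s - (total - s) / (n - 1) for s in scores]
    if mode == "hybrid":
        if teams is None:
            raise ValueError("hybrid mode needs a team label per agent")
        team_totals = {}
        for score, team in zip(scores, teams):
            team_totals[team] = team_totals.get(team, 0.0) + score
        grand_total = sum(team_totals.values())
        n_teams = len(team_totals)
        return [
            team_totals[t] - (grand_total - team_totals[t]) / (n_teams - 1)
            for t in teams
        ]
    raise ValueError(f"unknown mode: {mode}")


print(assign_rewards([3.0, 1.0], "cooperative"))   # [4.0, 4.0]
print(assign_rewards([3.0, 1.0], "competitive"))   # [2.0, -2.0]
print(assign_rewards([3.0, 1.0, 2.0, 0.0], "hybrid", teams=[0, 0, 1, 1]))
```

With the same scores, the cooperative scheme gives both agents an identical signal, while the competitive scheme rewards one agent exactly as much as it penalizes the other.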

A minimal single-agent example: tabular Q-learning on a five-cell corridor, where the agent starts at position 0 and is rewarded for reaching position 4.

```python
import random

import numpy as np

# Environment: a 1-D corridor; the agent starts at 0 and the goal is position 4.
states = 5        # positions 0 to 4
actions = [0, 1]  # 0 = left, 1 = right
goal = 4

# Q-table: one row per position, one column per action.
Q = np.zeros((states, len(actions)))

# Hyperparameters
alpha = 0.1    # learning rate
gamma = 0.9    # discount factor
epsilon = 0.2  # exploration rate
episodes = 50

# Q-learning loop
for episode in range(episodes):
    state = 0  # start at position 0
    done = False

    while not done:
        # Choose action (epsilon-greedy)
        if random.uniform(0, 1) < epsilon:
            action = random.choice(actions)
        else:
            action = int(np.argmax(Q[state]))

        # Take action; walls clamp the position to the corridor.
        next_state = state + 1 if action == 1 else state - 1
        next_state = max(0, min(states - 1, next_state))

        # Reward: 1 only on reaching the goal.
        reward = 1 if next_state == goal else 0

        # Q-learning update
        Q[state, action] += alpha * (
            reward + gamma * np.max(Q[next_state]) - Q[state, action]
        )

        state = next_state
        done = state == goal

# Show learned Q-table
print("Learned Q-table:")
print(Q)

# Test agent: follow the greedy policy. The step cap guards against an
# endless loop if 50 episodes were not enough to learn a path to the goal.
max_test_steps = 100
state = 0
steps = [state]
while state != goal and len(steps) <= max_test_steps:
    action = int(np.argmax(Q[state]))
    state = state + 1 if action == 1 else state - 1
    state = max(0, min(states - 1, state))
    steps.append(state)

print("Agent path to goal:", steps)
```
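For reference, the update line in the loop above is the standard tabular Q-learning rule, written here with the code's hyperparameters `alpha` as $\alpha$ and `gamma` as $\gamma$:

$$
Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]
$$

As a worked step under the settings above ($\alpha = 0.1$, $\gamma = 0.9$, all Q-values starting at zero): the first time the agent moves right from position 3 into the goal it receives $r = 1$. The goal row is never updated, because the episode ends there, so $\max_{a'} Q(4, a') = 0$ and

$$
Q(3, \text{right}) \leftarrow 0 + 0.1 \left[ 1 + 0.9 \cdot 0 - 0 \right] = 0.1
$$

A second such transition gives $0.1 + 0.1 \left[ 1 + 0 - 0.1 \right] = 0.19$. In later episodes this value feeds back into positions 2, 1, and 0 through the $\gamma \max_{a'} Q(s', a')$ term, which is how the reward at the goal propagates toward the start.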

A two-agent version of the same corridor task. Each agent has its own Q-table and learns independently; the agents do not affect each other's transitions or rewards.

```python
import random

import numpy as np

# Environment: each agent walks its own copy of the corridor (positions 0 to 4).
states = 5
actions = [0, 1]  # 0 = left, 1 = right
goal = 4

# Initialize one Q-table per agent.
Q_agent1 = np.zeros((states, len(actions)))
Q_agent2 = np.zeros((states, len(actions)))

# Hyperparameters
alpha = 0.1    # learning rate
gamma = 0.9    # discount factor
epsilon = 0.2  # exploration rate
episodes = 50


def move(state, action):
    """Return the next position, clamped to the corridor."""
    return max(0, min(states - 1, state + 1 if action == 1 else state - 1))


def q_learning_step(Q, state):
    """Take one epsilon-greedy step, update Q in place, return the next state."""
    if random.uniform(0, 1) < epsilon:
        action = random.choice(actions)
    else:
        action = int(np.argmax(Q[state]))
    next_state = move(state, action)
    reward = 1 if next_state == goal else 0
    Q[state, action] += alpha * (
        reward + gamma * np.max(Q[next_state]) - Q[state, action]
    )
    return next_state


for episode in range(episodes):
    state1 = state2 = 0
    # Agents take turns; each stops acting once it has reached the goal.
    while state1 != goal or state2 != goal:
        if state1 != goal:
            state1 = q_learning_step(Q_agent1, state1)
        if state2 != goal:
            state2 = q_learning_step(Q_agent2, state2)

# Show learned Q-tables
print("Agent 1 Q-table:\n", Q_agent1)
print("Agent 2 Q-table:\n", Q_agent2)


# Test paths
def test_agent(Q, max_steps=100):
    """Follow the greedy policy from position 0; stop at the goal or after max_steps."""
    state = 0
    path = [state]
    while state != goal and len(path) <= max_steps:
        state = move(state, int(np.argmax(Q[state])))
        path.append(state)
    return path


print("Agent 1 path:", test_agent(Q_agent1))
print("Agent 2 path:", test_agent(Q_agent2))
```
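In the two-agent corridor code, each agent's reward depends only on its own moves. For contrast, here is a minimal sketch of a cooperative setup in which the reward is joint: two independent Q-learners play a repeated two-action coordination game and are both rewarded only when their actions match. The function name `train_coordination`, the game itself, and the parameter values are assumptions made for this illustration.

```python
import random


def train_coordination(steps=2000, alpha=0.1, epsilon=0.2, seed=0):
    """Two independent Q-learners in a repeated two-action coordination game.

    Both agents receive the same joint reward: 1 if their actions match,
    otherwise 0. The game has no state, so each agent keeps one Q-value
    per action.
    """
    rng = random.Random(seed)
    q = [[0.0, 0.0], [0.0, 0.0]]  # q[agent][action]

    def choose(values):
        # Epsilon-greedy; ties resolve to the lower action index.
        if rng.random() < epsilon:
            return rng.randrange(2)
        return 0 if values[0] >= values[1] else 1

    for _ in range(steps):
        a0, a1 = choose(q[0]), choose(q[1])
        reward = 1.0 if a0 == a1 else 0.0  # shared (joint) reward
        # Stateless Q-learning update: no next state, so no bootstrap term.
        q[0][a0] += alpha * (reward - q[0][a0])
        q[1][a1] += alpha * (reward - q[1][a1])
    return q


q = train_coordination()
greedy = [0 if values[0] >= values[1] else 1 for values in q]
print("Q-values:", q)
print("Greedy actions:", greedy)
```

Because each agent's reward now depends on what the other one does, the value of an action is only meaningful relative to the partner's current behavior. That dependency is what separates multi-agent learning from running several single-agent learners side by side.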